We are happy to announce that our paper “Asymmetries in the processing of vowel height” will be appearing in the Journal of Speech, Language, & Hearing Research, authored by Philip Monahan, William Idsardi and Mathias Scharinger. A short summary is given below:

Purpose: Speech perception can be described as the transformation of continuous acoustic information into discrete memory representations. Therefore, research on neural representations of speech sounds is particularly important for a better understanding of this transformation. Speech perception models make specific assumptions regarding the representation of mid vowels (e.g., [ɛ]) that are articulated with a neutral position in regard to height. One hypothesis is that their representation is less specific than the representation of vowels with a more specific position (e.g., [æ]).

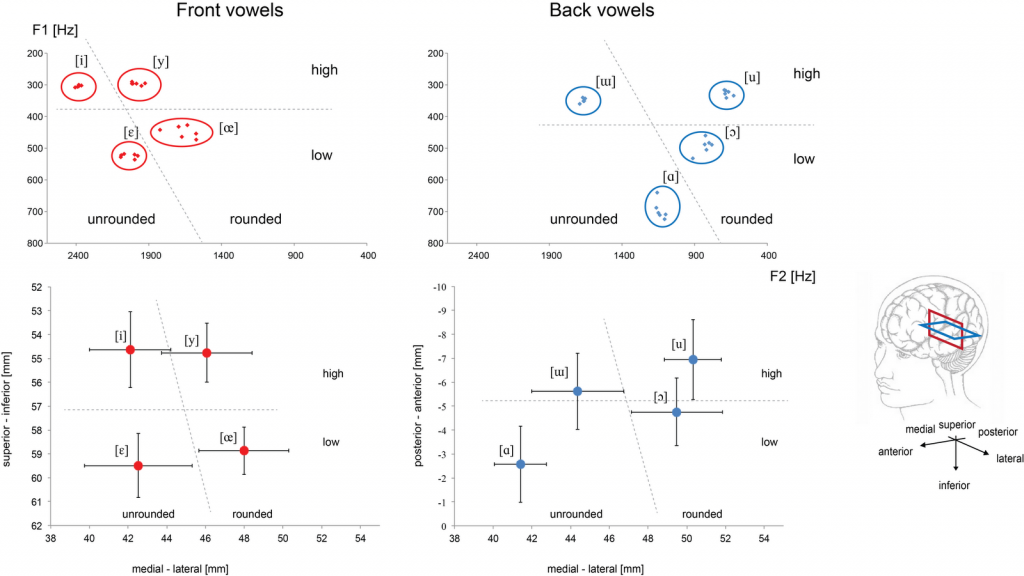

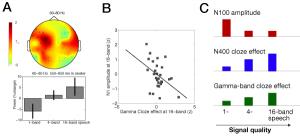

Method: In a magnetoencephalography study, we tested the underspecification of mid vowels in American English. Using a mismatch negativity (MMN) paradigm, mid and low lax vowels ([ɛ]/[æ]), and high and low lax vowels ([ɪ]/[æ]), were opposed, and M100/N1 dipole source parameters as well as MMN latency and amplitude were examined.

Results: Larger MMNs occurred when the mid vowel [ɛ] was a deviant to the standard [æ], a result consistent with less specific representations for mid vowels. MMNs of equal magnitude were elicited in the high–low comparison, consistent with more specific representations for both high and low vowels. M100 dipole locations support early vowel categorization on the basis of linguistically relevant acoustic–phonetic features.

Conclusion: We take our results to reflect an abstract long-term representation of vowels that does not include redundant specifications at very early stages of processing the speech signal. Moreover, the dipole locations indicate extraction of distinctive features and their mapping onto representationally faithful cortical locations (i.e., a feature map).

[Update]

The paper is available here.

References

- Scharinger M, Monahan PJ, Idsardi WJ. Asymmetries in the processing of vowel height. J Speech Lang Hear Res. 2012 Jun;55(3):903–18. PMID: 22232394.
