Background: Goal-directed behaviour in temporally dynamic environments requires focusing on relevant information without being distracted by irrelevant information. Two cognitive processes are necessary to achieve this. On the one hand, attentional sampling of target stimuli has been the focus of extensive research. On the other hand, it is less well understood how the human neural system exploits temporal information in the stimulus to filter out distraction. In the present project, we use the auditory modality as a test case to study the temporal dynamics of attentional filtering and its neural implementation.

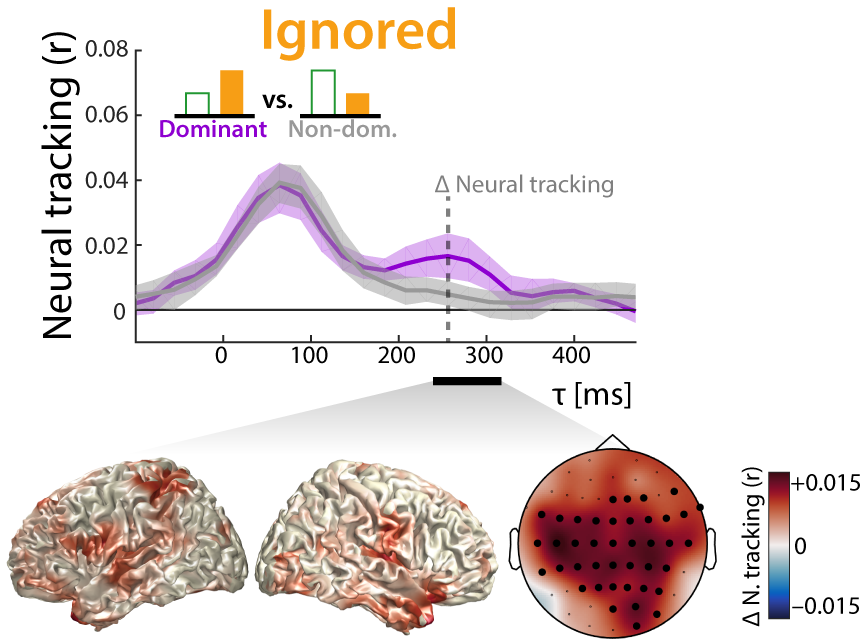

Approach and general hypothesis: In three variants of the “Irrelevant-Sound Task”, we will manipulate temporal aspects of auditory distractors. Behavioural recall of target stimuli despite distraction and responses in the electroencephalogram (EEG) will reflect the integrity and neural implementation of the attentional filter. In line with preliminary research, our general hypothesis is that attentional filtering is based on mechanisms similar to those of attentional sampling, but with reversed sign: for instance, while attention to rhythmic stimuli increases neural sensitivity at time points of expected target occurrence, filtering of distractors should instead decrease neural sensitivity at the time of expected distraction.

Work programme: In each of the three Work Packages (WPs), we will take an established neural mechanism of attentional sampling as a model and test the existence and neural implementation of a similar mechanism for attentional filtering. In this way, we will investigate whether attentional filtering follows an intrinsic rhythm (WP1), whether rhythmic distractors can entrain attentional filtering (WP2), and whether foreknowledge about the time of distraction induces top-down tuning of the attentional filter in frontal cortex regions (WP3).

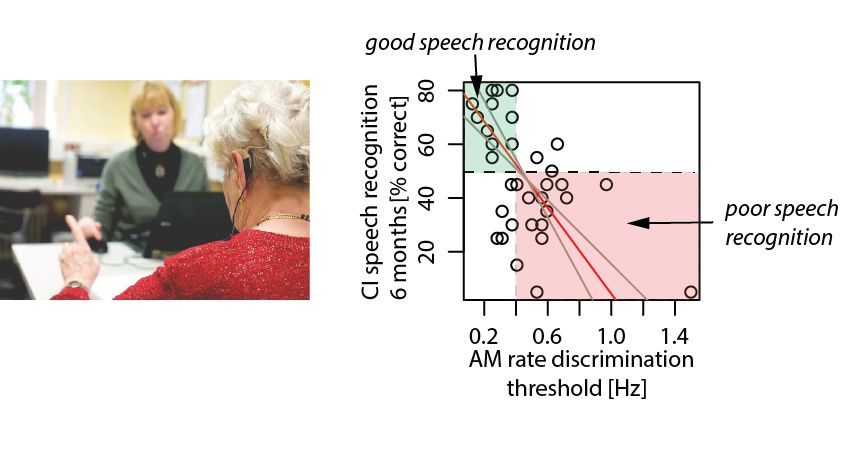

Objectives and relevance: The primary objective of this research is to contribute to the foundational science of human selective attention, which requires a comprehensive understanding of how the neural system filters out distraction. Furthermore, hearing difficulties often stem from distraction by salient but irrelevant sound. Results of this research will translate to the development of hearing aids that take neuro-cognitive mechanisms into account to filter out distraction more efficiently.