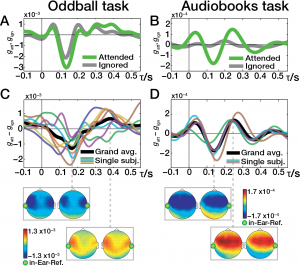

Towards a brain-controlled hearing aid: PhD student Lorenz Fiedler shows how attended and ignored auditory streams are represented differently in neural responses, and how the focus of auditory attention can be extracted from EEG signals recorded at electrodes placed inside the ear canal and around the ear.

Abstract

Objective. Conventional, multi-channel scalp electroencephalography (EEG) allows the identification of the attended speaker in concurrent-listening (‘cocktail party’) scenarios. This implies that EEG might provide valuable information to complement hearing aids with some form of EEG and to install a level of neuro-feedback. Approach. To investigate whether a listener’s attentional focus can be detected from single-channel hearing-aid-compatible EEG configurations, we recorded EEG from three electrodes inside the ear canal (‘in-Ear-EEG’) and additionally from 64 electrodes on the scalp. In two different concurrent-listening tasks, participants (n = 7) were fitted with individualized in-Ear-EEG pieces and were asked to attend either to one of two dichotically presented, concurrent tone streams or to one of two diotically presented, concurrent audiobooks. A forward encoding model was trained to predict the EEG response at single EEG channels. Main results. Each individual participant’s attentional focus could be detected from the single-channel EEG response recorded from short-distance configurations consisting only of a single in-Ear-EEG electrode and an adjacent scalp-EEG electrode. The differences in neural responses to attended and ignored stimuli were consistent in morphology (i.e. polarity and latency of components) across subjects. Significance. In sum, our findings show that the EEG response from a single-channel, hearing-aid-compatible configuration provides valuable information to identify a listener’s focus of attention.
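To make the idea of a forward encoding model concrete, here is a minimal sketch in Python on simulated data. It is not the pipeline used in the paper: the abstract does not state the stimulus features, time lags, regularization, or decision rule, so the ridge regression, the 10-sample lag window, the correlation-based comparison, and all function names (`lagged_design`, `fit_trf`, `predict_eeg`, `decode_attention`) are illustrative assumptions.

```python
import numpy as np


def lagged_design(stimulus, n_lags):
    """Design matrix whose column k is the stimulus delayed by k samples."""
    n = len(stimulus)
    X = np.zeros((n, n_lags))
    for k in range(n_lags):
        X[k:, k] = stimulus[:n - k]  # zero-padded at the start
    return X


def fit_trf(stimulus, eeg, n_lags, ridge=1.0):
    """Ridge-regularized least squares mapping stimulus lags to one EEG channel.

    The ridge penalty is an assumption; the abstract names no estimator.
    """
    X = lagged_design(stimulus, n_lags)
    return np.linalg.solve(X.T @ X + ridge * np.eye(n_lags), X.T @ eeg)


def predict_eeg(stimulus, trf):
    """Predict the single-channel EEG response from a stimulus feature."""
    return lagged_design(stimulus, len(trf)) @ trf


def decode_attention(eeg, stim_a, stim_b, trf_attended):
    """Pick the stream whose predicted response correlates best with the EEG.

    Hypothetical decision rule, chosen here for illustration only.
    """
    r_a = np.corrcoef(predict_eeg(stim_a, trf_attended), eeg)[0, 1]
    r_b = np.corrcoef(predict_eeg(stim_b, trf_attended), eeg)[0, 1]
    return "a" if r_a > r_b else "b"


# Simulated data: the listener attends stream "a"; the EEG is the attended
# stream filtered by an unknown response kernel plus noise.
rng = np.random.default_rng(0)
n_samples, n_lags = 4000, 10
stim_a = rng.standard_normal(n_samples)
stim_b = rng.standard_normal(n_samples)
true_kernel = np.hanning(n_lags)
eeg = lagged_design(stim_a, n_lags) @ true_kernel + rng.standard_normal(n_samples)

# Train on the first half, decode on the held-out second half.
half = n_samples // 2
trf = fit_trf(stim_a[:half], eeg[:half], n_lags)
decoded = decode_attention(eeg[half:], stim_a[half:], stim_b[half:], trf)
print("decoded stream:", decoded)
```

The forward direction (stimulus to EEG) suits the single-channel setting described above, because the model is fitted per channel and does not need to combine many electrodes the way a backward stimulus-reconstruction model would.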

Related

- New preprint paper: Fiedler et al. on predicting focus of attention from in-ear EEG

- New paper in press in Brain Stimulation: Wöstmann, Vosskuhl, Obleser, and Herrmann demonstrate that externally amplified oscillations affect auditory spatial attention

- [UPDATE] New paper in PNAS: Spatiotemporal dynamics of auditory attention synchronize with speech, Woestmann et al.

- New paper in press in Cerebral Cortex: Wöstmann et al. on ignoring degraded speech