Congratulations to Obleserlab postdoc Julia Erb on her new paper, to appear in eLife: “Temporal selectivity declines in the aging human auditory cortex”.

It’s a trope that older listeners struggle more to comprehend speech (think of Professor Tournesol in the famous Tintin comics!). However, the neurobiology of why and how ageing and speech comprehension difficulties are linked has proven much more elusive.

Part of this lack of knowledge is directly rooted in our limited understanding of how the central parts of the hearing brain – auditory cortex, broadly speaking – are organized.

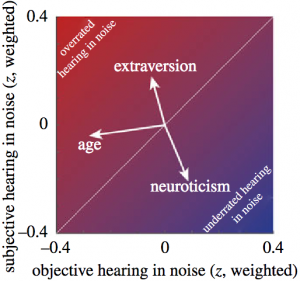

Does auditory cortex of older adults have different tuning properties? That is, do young and older adults differ in the way their auditory subfields represent certain features of sound?

A specific hypothesis followed from this, derived from what is known about age-related change in neurobiological and psychological processes in general (the idea of so-called “dedifferentiation”): tuning to certain features would “broaden” and thus lose selectivity in older compared to younger listeners.

More mechanistically, we aimed not only to observe so-called “cross-sectional” (i.e., age-group) differences, but also to link a listener’s chronological age as closely as possible to changes in cortical tuning.

Amongst older listeners, we observe that temporal-rate selectivity declines with increasing age. In line with senescent neural dedifferentiation more generally, our results highlight decreased selectivity to temporal information as a hallmark of the aging auditory cortex.


This research is generously supported by the ERC Consolidator project AUDADAPT, and data for this study were acquired at the CBBM at the University of Lübeck.
