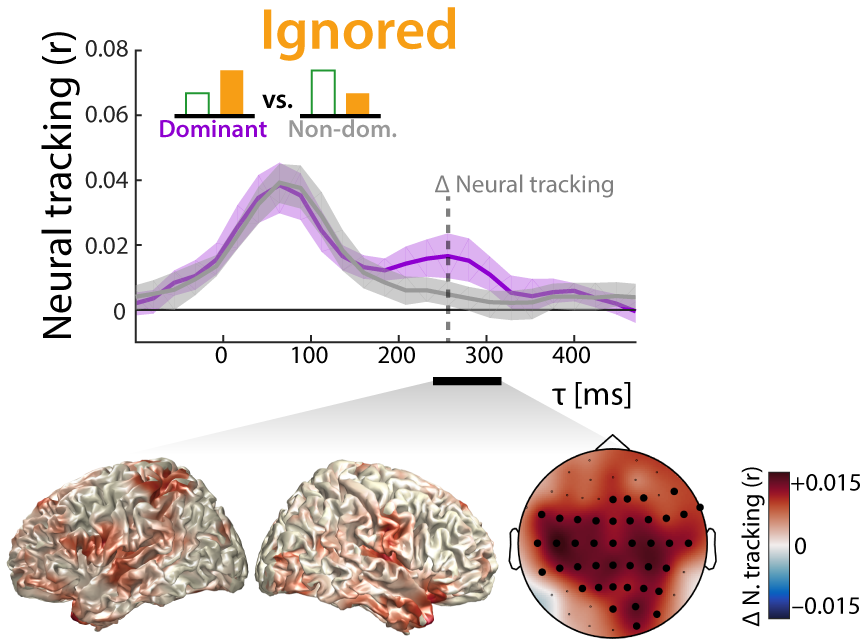

Listening requires selective neural processing of the incoming sound mixture, which in humans is borne out by a surprisingly clean representation of attended-only speech in auditory cortex. How this neural selectivity is achieved even at negative signal-to-noise ratios (SNR) remains unclear. We show that, under such conditions, a late cortical representation (i.e., neural tracking) of the ignored acoustic signal is key to successful separation of attended and distracting talkers (i.e., neural selectivity). We recorded and modeled the electroencephalographic response of 18 participants who attended to one of two simultaneously presented stories, while the SNR between the two talkers varied dynamically between +6 and −6 dB. The neural tracking showed an increasing early-to-late attention-biased selectivity. Importantly, acoustically dominant (i.e., louder) ignored talkers were tracked neurally by late involvement of fronto-parietal regions, which contributed to enhanced neural selectivity. This neural selectivity, by way of representing the ignored talker, offers a mechanistic neural account of attention under real-life acoustic conditions.
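To make the SNR range concrete, the short sketch below converts the decibel values mentioned above into linear ratios. It assumes the standard definitions (amplitude ratio = 10^(dB/20), power ratio = 10^(dB/10)) and that SNR refers to the level of the attended talker relative to the ignored one; the abstract does not spell out either convention, and the function names are illustrative only.

```python
def db_to_amplitude_ratio(snr_db: float) -> float:
    """Convert an SNR in dB to a linear (RMS) amplitude ratio.

    Assumption: SNR = 20 * log10(attended_rms / ignored_rms).
    """
    return 10 ** (snr_db / 20)


def db_to_power_ratio(snr_db: float) -> float:
    """Convert an SNR in dB to a linear power ratio."""
    return 10 ** (snr_db / 10)


if __name__ == "__main__":
    # The endpoints and midpoint of the SNR range described in the abstract.
    for snr_db in (6, 0, -6):
        amp = db_to_amplitude_ratio(snr_db)
        power = db_to_power_ratio(snr_db)
        print(f"{snr_db:+d} dB: amplitude ratio {amp:.3f}, power ratio {power:.3f}")
```

Under these assumptions, +6 dB corresponds to the attended talker having roughly twice the RMS amplitude of the ignored talker, and −6 dB to roughly half, which is the condition in which the ignored talker is acoustically dominant.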

The paper is available here.