Obleserlab senior PhD student Leo Waschke, alongside co-authors Sarah Tune and Jonas Obleser, has a new paper in eLife.

The processing of sensory information from our environment is not constant but varies with changes in ongoing brain activity, or brain states. Consequently, the acuity of perceptual decisions also depends on the brain state during which sensory information is processed. Recent work in non-human animals suggests two key processes that shape brain states relevant for sensory processing and perceptual performance. On the one hand, the momentary level of neural desynchronization in sensory cortical areas has been shown to affect neural representations of sensory input and related performance. On the other hand, the current level of arousal and the associated noradrenergic activity have been linked to changes in sensory processing and perceptual acuity.

However, it is currently unclear whether local neural desynchronization and arousal constitute distinct brain states with different consequences for sensory processing and behaviour, or whether they are two interrelated manifestations of ongoing brain activity that jointly affect behaviour. Furthermore, the exact shape of the relationship between perceptual performance and each of the two brain-state markers (e.g., linear vs. quadratic) remains unknown.

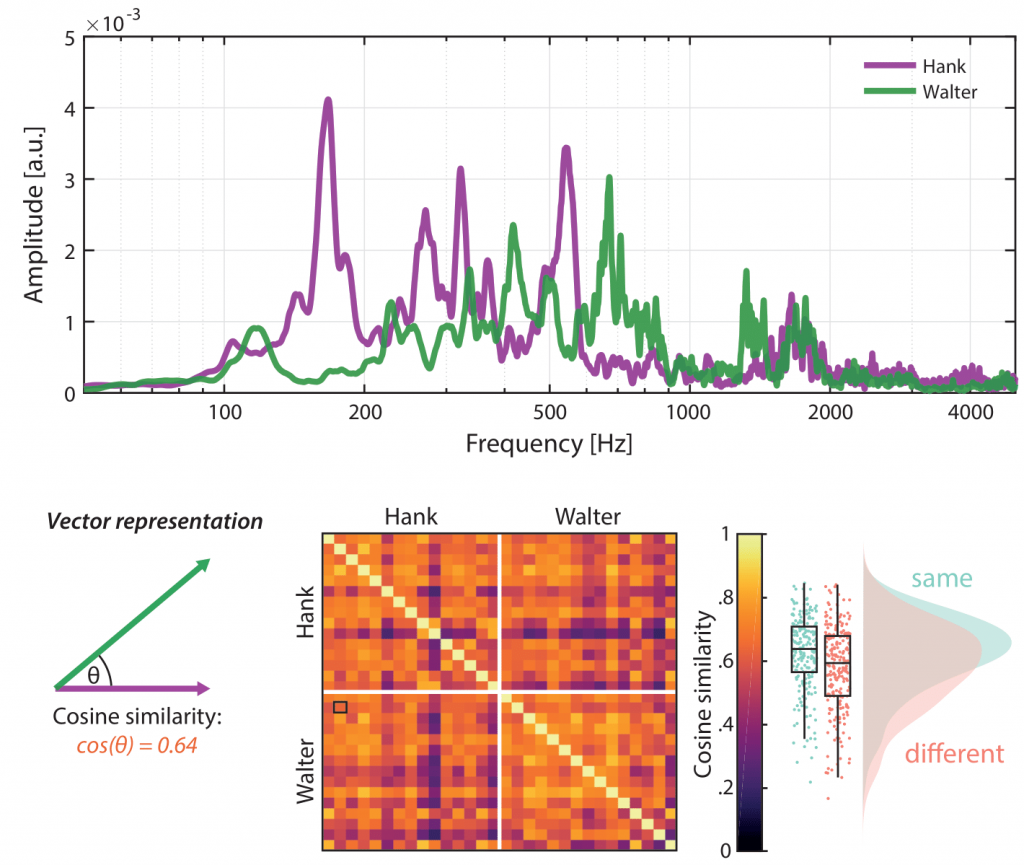

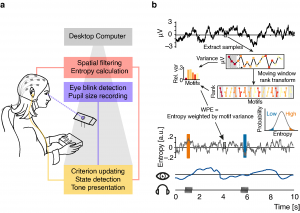

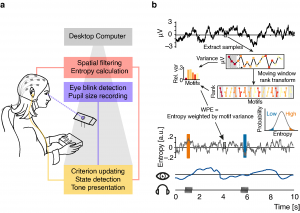

To translate findings from animal physiology to human cognitive neuroscience, and to test the exact shape of the unique and shared influences of local cortical desynchronization and global arousal on sensory processing and perceptual performance, we recorded electroencephalography (EEG) and pupillometry in 25 human participants while they performed a challenging auditory discrimination task.

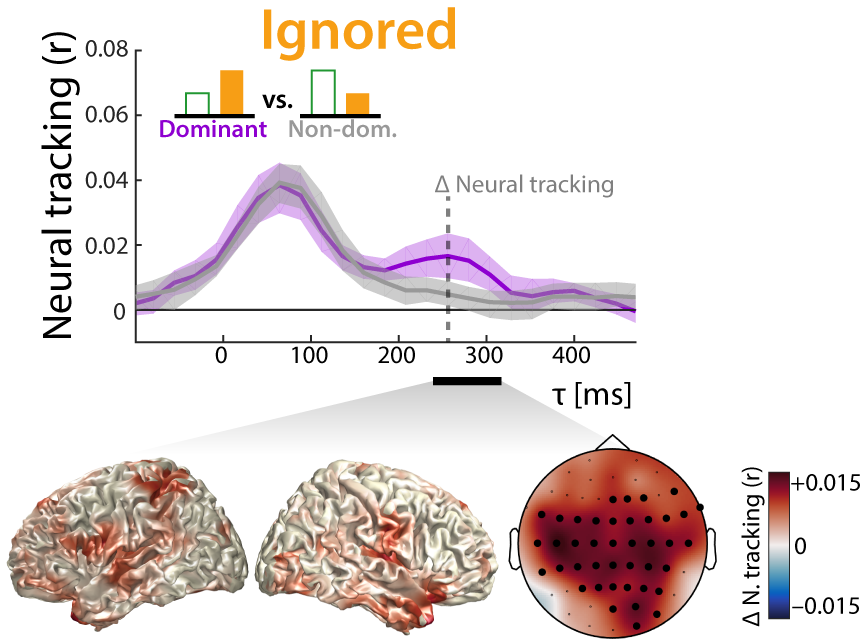

Importantly, auditory stimuli were selectively presented during periods of especially high or low auditory cortical desynchronization, as approximated by an information-theoretic measure of time-series complexity (weighted permutation entropy). Using a closed-loop real-time setup, we were able not only to present stimuli during different desynchronization states but also to sample the whole distribution of such states, a prerequisite for accurately assessing brain-behaviour relationships. Given the established link between noradrenergic activity and pupil size, the recorded pupillometry data additionally allowed us to draw inferences about the current level of arousal.

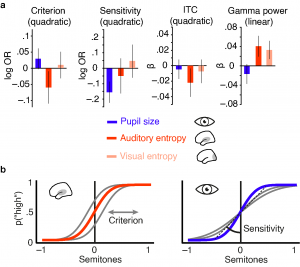

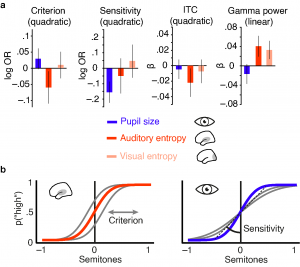

Single-trial analyses of EEG activity, pupillometry and behaviour revealed clearly dissociable influences of the two brain-state markers on ongoing brain activity, early sound-related activity and behaviour. High-desynchronization states were characterized by a pronounced reduction in oscillatory power across a wide frequency range, whereas high-arousal states coincided with a decrease in oscillatory power that was limited to high frequencies. Similarly, early sound-evoked activity was differentially affected by auditory cortical desynchronization and pupil-linked arousal. Phase-locked responses and evoked gamma power increased with local desynchronization, with a tendency to saturate at intermediate levels. Post-stimulus low-frequency power, on the other hand, increased with pupil-linked arousal.

Most importantly, local desynchronization and pupil-linked arousal showed different relationships with perceptual performance. Participants responded fastest and with the least bias following intermediate levels of auditory cortical desynchronization, whereas intermediate levels of pupil-linked arousal were associated with the highest sensitivity. Thus, although both processes constitute behaviourally relevant brain states whose effects on perceptual performance follow an inverted U, they affect distinct subdomains of behaviour. Taken together, our results support a model in which independent states of local desynchronization and global arousal jointly shape states for optimal sensory processing and perceptual performance. The published manuscript, including all supplemental information, can be found here.