I am also delighted to report the fruits of a very recent collaboration with Nathan Weisz and his OBOB lab at the University of Konstanz, Germany.

Alpha Rhythms in Audition: Cognitive and Clinical Perspectives

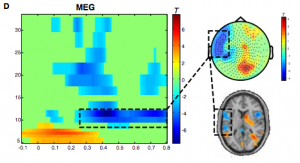

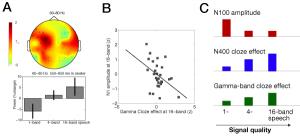

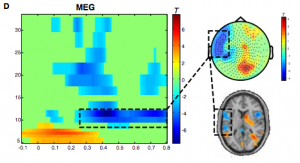

In this review paper, which appears in the new and exciting journal “Frontiers in Psychology”, we summarize the recent evidence that alpha oscillations (here broadly defined as 6 to 13 Hz) play a very interesting role in the auditory system, just as they do in the visual and somatosensory systems.

In essence, we back Ole Jensen’s and others’ quite parsimonious idea of alpha as a functional inhibition / gating system across cortical areas.

From our own lab, preliminary data from two recent experiments are included: one on the role of alpha oscillations as a potential marker for speech intelligibility and its acoustic determinants, and one on speech degradation and working memory load and their combined reflection in alpha power increases.

NB: the final PDF is not yet available, and Front Psychol is not yet listed in PubMed. This should not stop you from submitting to their exciting new journals, as the review process is very fair and efficient, and the outreach via free availability promises to be considerable.

References

- Weisz N, Hartmann T, Müller N, Lorenz I, Obleser J. Alpha rhythms in audition: cognitive and clinical perspectives. Front Psychol. 2011 Apr 26;2:73. PMID: 21687444.

Like the visual and the sensorimotor systems, the auditory system exhibits pronounced alpha-like resting oscillatory activity. Due to the relatively small spatial extent of auditory cortical areas, th […]