We are honoured and delighted that the Deutsche Forschungsgemeinschaft has deemed two of our recent applications worthy of funding: the two senior researchers in the lab, Sarah Tune and Malte Wöstmann, have each been awarded a three-year grant for their new projects. Congratulations!

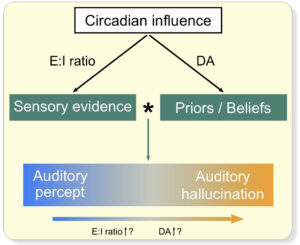

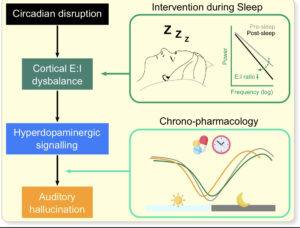

In her three-year, €360k project “How perceptual inference changes with age: Behavioural and brain dynamics of speech perception”, Sarah Tune will explore the role of perceptual priors in speech perception in ageing listeners, mainly using neural and perceptual modelling and functional neuroimaging.

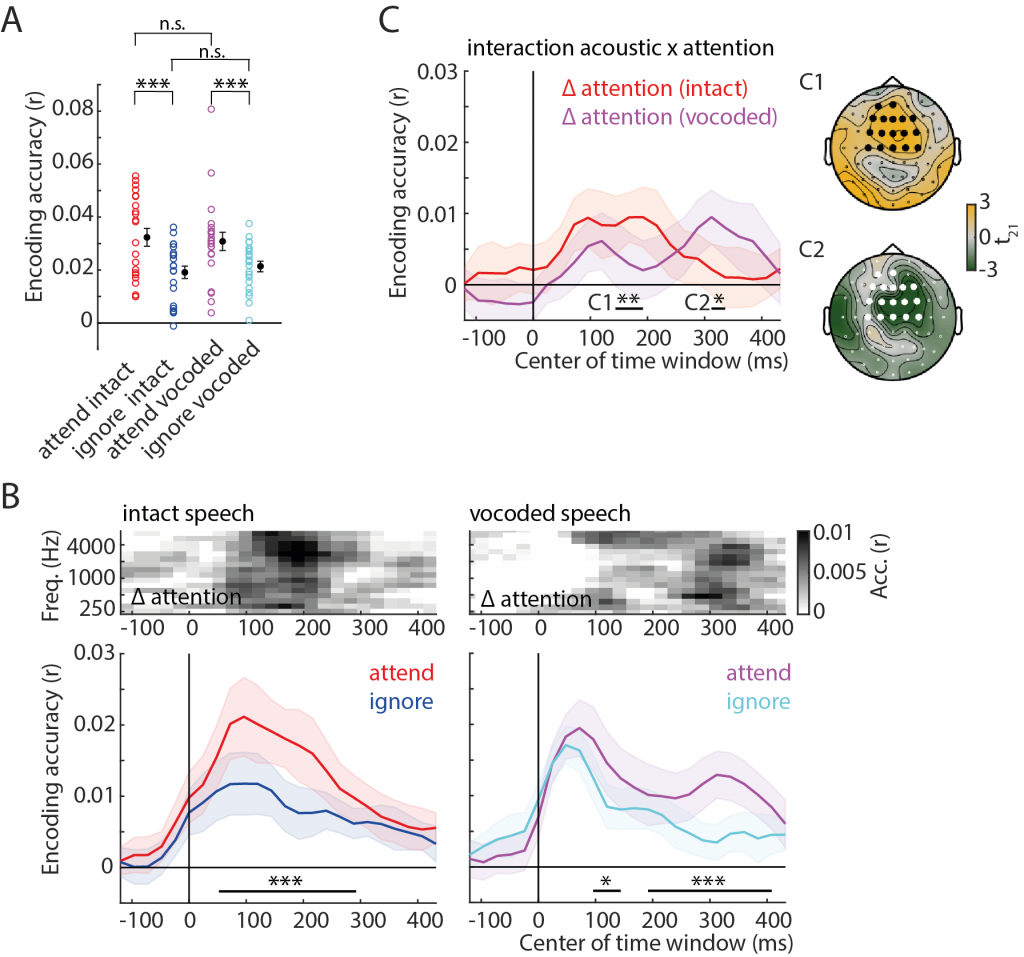

In his three-year, €270k project “Investigation of capture and suppression in auditory attention”, Malte Wöstmann will continue and refine his successful line of research on dissociating the role of suppressive mechanisms in the listening mind and brain, mainly using EEG and behavioural modelling.

Both will soon advertise PhD positions for candidates to join us and work on these exciting projects with Sarah, Malte and the rest of the Obleserlab team.