We are excited to share that former Obleserlab PhD student Leo Waschke, together with colleagues from his new lab (Doug Garrett, Niels Kloosterman) and his old lab (Jonas Obleser), has published an in-depth perspective piece in Neuron with the provocative title “Behavior needs neural variability”.

Our article is essentially an extensive tribute to the “second moment” of neural activity, in statistical terms. Variability, whether quantified as variance, entropy, or spectral slope, is the long-neglected twin of averages, and it holds great promise for understanding neural states (how does neural activity differ from one moment to the next?) and traits (how do individuals differ from each other?).

Congratulations, Leo!


Congratulations to Obleserlab postdoc Julia Erb for her new paper to appear in eLife, “Temporal selectivity declines in the aging human auditory cortex”.

It’s a trope that older listeners struggle more to comprehend speech (think of Professor Tournesol in the famous Tintin comics!). Why and how ageing and speech comprehension difficulties are linked at the neurobiological level, however, has proven much more elusive.

Part of this lack of knowledge is directly rooted in our limited understanding of how the central parts of the hearing brain – auditory cortex, broadly speaking – are organized.

Does auditory cortex of older adults have different tuning properties? That is, do young and older adults differ in the way their auditory subfields represent certain features of sound?

A specific hypothesis following from this, derived from what is known about age-related change in neurobiological and psychological processes in general (so-called “dedifferentiation”), was that tuning to certain features would “broaden” and thus lose selectivity in older compared to younger listeners.

More mechanistically, we aimed not only to observe so-called “cross-sectional” (i.e., age-group) differences but also to link a listener’s chronological age as closely as possible to changes in cortical tuning.

Amongst older listeners, we observed that temporal-rate selectivity declines with increasing age. In line with senescent neural dedifferentiation more generally, our results highlight decreased selectivity to temporal information as a hallmark of the aging auditory cortex.

This research is generously supported by the ERC Consolidator project AUDADAPT, and data for this study were acquired at the CBBM at University of Lübeck.

Obleserlab senior PhD student Leo Waschke, alongside co-authors Sarah Tune and Jonas Obleser, has a new paper in eLife.

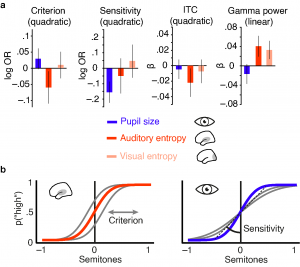

The processing of sensory information from our environment is not constant but varies with changes in ongoing brain activity, or brain states. Thus, the acuity of perceptual decisions also depends on the brain state in which sensory information is processed. Recent work in non-human animals suggests two key processes that shape brain states relevant for sensory processing and perceptual performance. On the one hand, the momentary level of neural desynchronization in sensory cortical areas has been shown to impact neural representations of sensory input and related performance. On the other hand, the current level of arousal and related noradrenergic activity has been linked to changes in sensory processing and perceptual acuity.

However, it is currently unclear whether local neural desynchronization and arousal constitute distinct brain states with different consequences for sensory processing and behaviour, or whether they are two interrelated manifestations of ongoing brain activity that jointly affect behaviour. Furthermore, the exact shape of the relationship between perceptual performance and each of the two brain-state markers (e.g., linear vs. quadratic) remains unknown.
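To make the linear-versus-quadratic question concrete, here is a minimal Python sketch that fits y = b0 + b1·x + b2·x² by ordinary least squares and checks for an inverted U. It is an illustration under simple assumptions (one set of points, no random effects), not necessarily the statistical model used in the study; the function names are hypothetical.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [[float(v) for v in a[i]] + [float(b[i])] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[pivot][col] == 0.0:
            raise ValueError("singular system")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_quadratic(x, y):
    """Least-squares fit of y = b0 + b1*x + b2*x**2; returns [b0, b1, b2]."""
    s = [sum(xi ** k for xi in x) for k in range(5)]            # s[k] = sum of x**k
    t = [sum(yi * xi ** k for xi, yi in zip(x, y)) for k in range(3)]
    normal_matrix = [[s[i + j] for j in range(3)] for i in range(3)]
    return solve_linear_system(normal_matrix, t)


def looks_like_inverted_u(x, y):
    """True if the fitted parabola opens downward AND peaks inside the sampled range."""
    b0, b1, b2 = fit_quadratic(x, y)
    if b2 >= 0.0:
        return False
    peak = -b1 / (2.0 * b2)
    return min(x) < peak < max(x)


# Hypothetical brain-state values (x) and performance scores (y)
states = [i / 10 for i in range(-20, 21)]
performance = [1.0 - s ** 2 for s in states]
print(looks_like_inverted_u(states, performance))
```

The peak check matters: a negative quadratic term alone also fits a curve that rises monotonically and merely flattens out, so an inverted U is only plausible if the vertex lies within the observed range of the brain-state marker.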

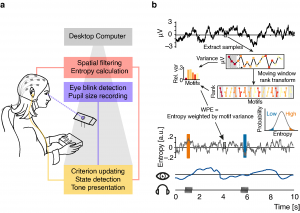

To transfer findings from animal physiology to human cognitive neuroscience, and to test the exact shape of the unique as well as shared influences of local cortical desynchronization and global arousal on sensory processing and perceptual performance, we recorded electroencephalography (EEG) and pupillometry in 25 human participants while they performed a challenging auditory discrimination task.

Importantly, auditory stimuli were presented selectively during periods of especially high or low auditory cortical desynchronization, as approximated by an information-theoretic measure of time-series complexity (weighted permutation entropy). A closed-loop real-time setup allowed us not only to present stimuli during different desynchronization states but also to sample the whole distribution of such states, a prerequisite for accurately assessing brain-behaviour relationships. Because pupil size is linked to noradrenergic activity, the recorded pupillometry data additionally allowed us to draw inferences about the current level of arousal.
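The post names weighted permutation entropy (WPE) but gives no parameters. As a rough illustration, the Python sketch below follows the commonly used definition: each embedded vector of length m is mapped to its ordinal pattern, patterns are weighted by the variance of their vector, and the Shannon entropy of the weighted pattern distribution is normalised by log(m!). The embedding dimension m = 3 and delay tau = 1 are illustrative assumptions, not the settings of the study.

```python
import math
from collections import defaultdict


def weighted_permutation_entropy(x, m=3, tau=1, normalize=True):
    """Weighted permutation entropy of a 1-D sequence.

    m   -- embedding dimension (pattern length); illustrative default
    tau -- embedding delay in samples; illustrative default
    """
    n_vectors = len(x) - (m - 1) * tau
    if n_vectors <= 0:
        raise ValueError("time series too short for the chosen m and tau")

    pattern_weights = defaultdict(float)  # ordinal pattern -> summed weight
    total_weight = 0.0
    for j in range(n_vectors):
        vec = [x[j + k * tau] for k in range(m)]
        # Ordinal pattern: positions ordered by value (ties keep their original order)
        pattern = tuple(sorted(range(m), key=lambda k: vec[k]))
        # Weight each pattern by the variance of its embedded vector
        mean = sum(vec) / m
        weight = sum((v - mean) ** 2 for v in vec) / m
        pattern_weights[pattern] += weight
        total_weight += weight

    if total_weight == 0.0:
        return 0.0  # constant signal: no amplitude-weighted ordinal structure

    entropy = 0.0
    for w in pattern_weights.values():
        if w > 0.0:
            p = w / total_weight
            entropy -= p * math.log(p)

    # Divide by the maximum possible entropy, log(m!), to map onto [0, 1]
    return entropy / math.log(math.factorial(m)) if normalize else entropy


ramp = [float(i) for i in range(50)]  # perfectly predictable signal
print(weighted_permutation_entropy(ramp))
```

In a closed-loop setting, a function like this would be applied to a sliding window of the most recent EEG samples; higher values indicate less predictable, more desynchronized activity, which is the premise behind using WPE as a desynchronization proxy.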

Single-trial analyses of EEG activity, pupillometry, and behaviour revealed clearly dissociable influences of the two brain-state markers on ongoing brain activity, early sound-related activity, and behaviour. High desynchronization states were characterized by a pronounced reduction in oscillatory power across a wide frequency range, while high arousal states coincided with a decrease in oscillatory power that was limited to high frequencies. Similarly, early sound-evoked activity was differentially impacted by auditory cortical desynchronization and pupil-linked arousal. Phase-locked responses and evoked gamma power increased with local desynchronization, with a tendency to saturate at intermediate levels. Post-stimulus low-frequency power, on the other hand, increased with pupil-linked arousal.

Most importantly, local desynchronization and pupil-linked arousal displayed different relationships with perceptual performance. While participants responded fastest and least biased after intermediate levels of auditory cortical desynchronization, intermediate levels of pupil-linked arousal were associated with the highest sensitivity. Thus, although both processes constitute behaviourally relevant brain states that affect perceptual performance following an inverted U, they impact distinct subdomains of behaviour. Taken together, our results speak to a model in which independent states of local desynchronization and global arousal jointly shape states for optimal sensory processing and perceptual performance. The published manuscript, including all supplemental information, can be found here.

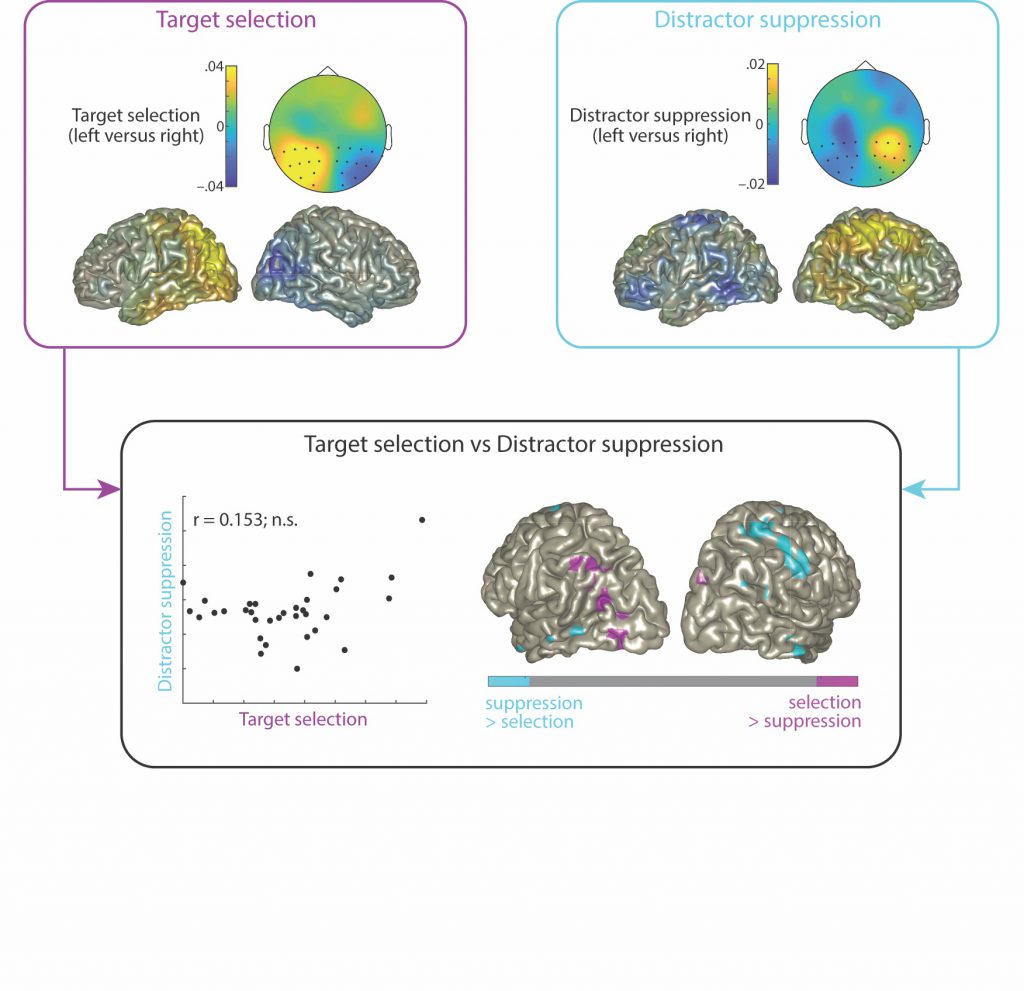

Wöstmann, Alavash and Obleser demonstrate that alpha oscillations in the human brain implement distractor suppression independent of target selection.

In theory, the ability to selectively focus on relevant objects in our environment rests on the selection of targets and the suppression of distraction. As it is unclear whether target selection and distractor suppression are independent, we designed an electroencephalography (EEG) study to directly contrast these two processes.

Participants performed a pitch discrimination task on a tone sequence presented at one loudspeaker location while a distracting tone sequence was presented at another location. When the distractor was fixed in the front, attention to upcoming targets on the left versus right side induced hemispheric lateralisation of alpha power with relatively higher power ipsi- versus contralateral to the side of attention.

Critically, when the target was fixed in the front, suppression of upcoming distractors reversed the pattern of alpha lateralisation; that is, alpha power increased contralateral to the distractor and decreased ipsilaterally. Since the two lateralised alpha responses were uncorrelated across participants, they can be considered to reflect largely independent cognitive mechanisms.

This was further supported by the fact that alpha lateralisation in response to distractor suppression originated in more anterior, frontal cortical regions compared with target selection (see figure).
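The ipsi- versus contralateral comparison above is often summarised as a normalised lateralisation index, (ipsi − contra) / (ipsi + contra). The post does not state the exact formula used, so the Python sketch below is an assumption; the channel lists and the function name are hypothetical.

```python
from statistics import mean


def alpha_lateralisation_index(left_hemisphere_power, right_hemisphere_power, reference_side):
    """Normalised index (ipsi - contra) / (ipsi + contra).

    left/right_hemisphere_power -- alpha power per channel (hypothetical channel selection)
    reference_side -- 'left' or 'right': side of the attended target, or of the distractor
    """
    left = mean(left_hemisphere_power)
    right = mean(right_hemisphere_power)
    if reference_side == "left":
        ipsi, contra = left, right
    elif reference_side == "right":
        ipsi, contra = right, left
    else:
        raise ValueError("reference_side must be 'left' or 'right'")
    if ipsi + contra <= 0.0:
        raise ValueError("alpha power must be positive")
    return (ipsi - contra) / (ipsi + contra)


# Target on the left, alpha higher over the left hemisphere: positive index
target_index = alpha_lateralisation_index([2.0, 2.0], [1.0, 1.0], "left")
# Distractor on the left, alpha higher over the right hemisphere: negative (reversed) index
distractor_index = alpha_lateralisation_index([1.0, 1.0], [2.0, 2.0], "left")
```

Expressing the index relative to the target side in one case and to the distractor side in the other is what makes the reversal described above show up as a change of sign.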

The paper is also available as a preprint here.

Wöstmann, Schmitt and Obleser demonstrate that closing the eyes enhances the attentional modulation of neural alpha power but does not affect behavioural performance in two listening tasks.

Does closing the eyes enhance our ability to listen attentively? In fact, many of us tend to close our eyes when listening conditions become challenging, for example on the phone. It is thus surprising that there is no published work on the behavioural or neural consequences of closing the eyes during attentive listening. In the present study, we demonstrate that eye closure increases not only the overall level of absolute alpha power but also the degree to which auditory attention modulates alpha power over time in synchrony with attending to versus ignoring speech. However, our behavioural results provide evidence for the absence of any difference in listening performance with closed versus open eyes. The likely reason for this is that the impact of eye closure on neural oscillatory dynamics does not match alpha power modulations associated with listening performance precisely enough (see figure).

The paper is available as a preprint here.

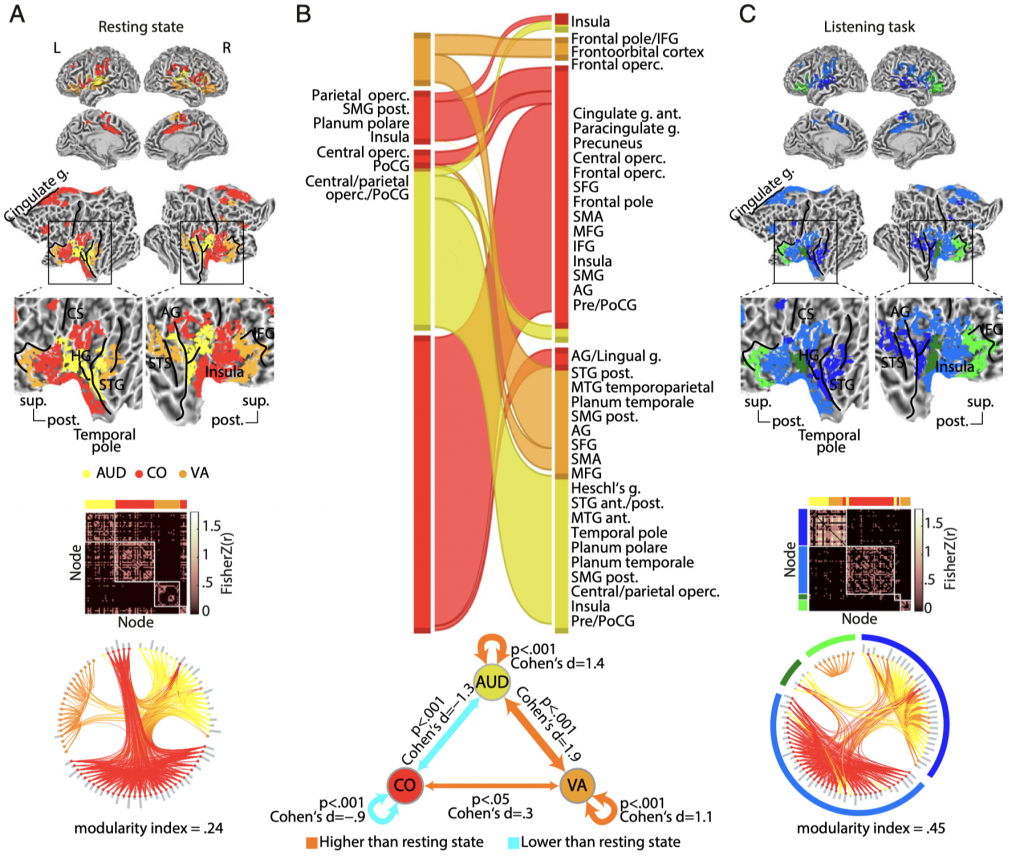

How brain areas communicate shapes human communication: The hearing regions in your brain form new alliances as you try to listen at the cocktail party

Obleserlab Postdocs Mohsen Alavash and Sarah Tune rock out an intricate graph-theoretical account of modular reconfigurations in challenging listening situations, and how these predict individuals’ listening success.

Available online now in PNAS! (Also, our uni is currently featuring a German-language press release on it, as well as an English-language version)
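The post does not describe the graph-theoretical pipeline. One widely used quantity for modular network organisation is Newman's modularity Q, which compares the edge weight falling within communities to what a degree-preserving random network would predict. The minimal Python sketch below computes Q for a given partition of a toy network (nodes could stand for brain regions, edge weights for connectivity); it is an illustration, not the study's analysis.

```python
def modularity(adjacency, communities):
    """Newman modularity Q = (1 / 2m) * sum_ij [A_ij - k_i * k_j / 2m] * delta(c_i, c_j).

    adjacency   -- symmetric matrix (list of lists) of non-negative edge weights
    communities -- community label for each node
    """
    n = len(adjacency)
    strengths = [sum(row) for row in adjacency]  # k_i: weighted degree of node i
    two_m = sum(strengths)                       # 2m: twice the total edge weight
    if two_m == 0:
        raise ValueError("graph has no edges")
    q = 0.0
    for i in range(n):
        for j in range(n):
            if communities[i] == communities[j]:
                # Observed weight minus the weight expected under the null model
                q += adjacency[i][j] - strengths[i] * strengths[j] / two_m
    return q / two_m


# Toy network: two triangles (nodes 0-2 and 3-5) joined by a single edge (2-3)
A = [
    [0, 1, 1, 0, 0, 0],
    [1, 0, 1, 0, 0, 0],
    [1, 1, 0, 1, 0, 0],
    [0, 0, 1, 0, 1, 1],
    [0, 0, 0, 1, 0, 1],
    [0, 0, 0, 1, 1, 0],
]
two_modules = modularity(A, [0, 0, 0, 1, 1, 1])
one_module = modularity(A, [0, 0, 0, 0, 0, 0])
```

Comparing Q (and the community labels themselves) between listening conditions is one way to quantify how a network "reconfigures"; the partition would normally come from a community-detection algorithm rather than being specified by hand as here.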

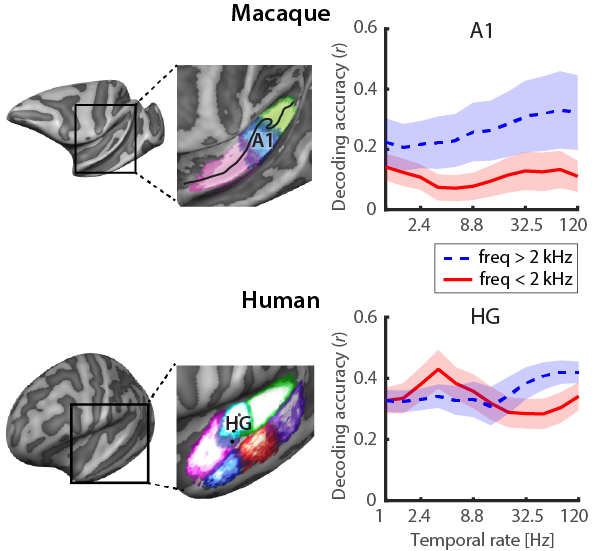

In a new comparative fMRI study just published in Cerebral Cortex, AC postdoc Julia Erb and her collaborators in the Formisano (Maastricht University) and Vanduffel labs (KU Leuven) provide us with novel insights into speech evolution. These data by Erb et al. reveal homologies and differences in natural sound-encoding in human and non-human primate cortex.

From the Abstract: “Understanding homologies and differences in auditory cortical processing in human and nonhuman primates is an essential step in elucidating the neurobiology of speech and language. Using fMRI responses to natural sounds, we investigated the representation of multiple acoustic features in auditory cortex of awake macaques and humans. Comparative analyses revealed homologous large-scale topographies not only for frequency but also for temporal and spectral modulations. Conversely, we observed a striking interspecies difference in cortical sensitivity to temporal modulations: While decoding from macaque auditory cortex was most accurate at fast rates (> 30 Hz), humans had highest sensitivity to ~3 Hz, a relevant rate for speech analysis. These findings suggest that characteristic tuning of human auditory cortex to slow temporal modulations is unique and may have emerged as a critical step in the evolution of speech and language.”

The paper is available here. Congratulations, Julia!

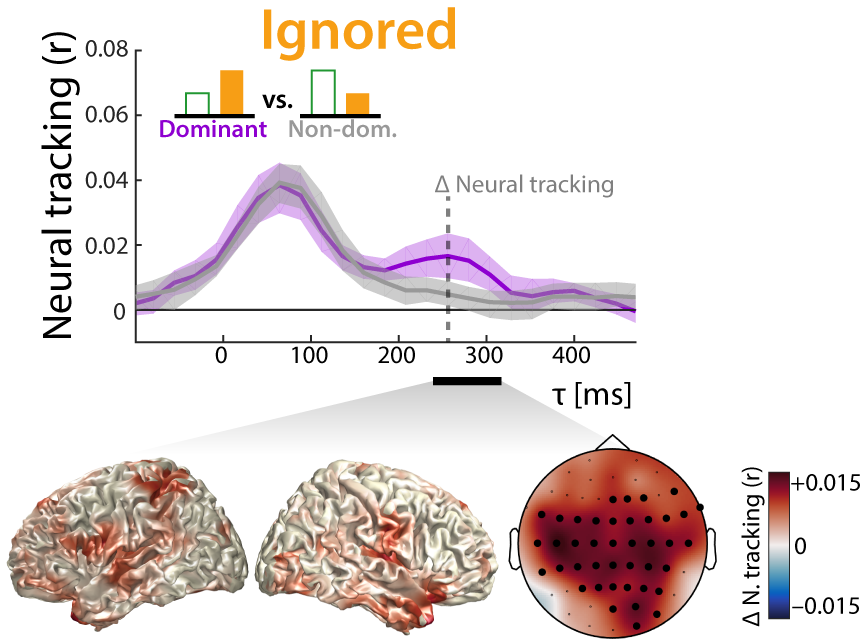

Listening requires selective neural processing of the incoming sound mixture, which in humans is borne out by a surprisingly clean representation of attended-only speech in auditory cortex. How this neural selectivity is achieved even at negative signal-to-noise ratios (SNR) remains unclear. We show that, under such conditions, a late cortical representation (i.e., neural tracking) of the ignored acoustic signal is key to successful separation of attended and distracting talkers (i.e., neural selectivity). We recorded and modeled the electroencephalographic response of 18 participants who attended to one of two simultaneously presented stories, while the SNR between the two talkers varied dynamically between +6 and −6 dB. The neural tracking showed an increasing early-to-late attention-biased selectivity. Importantly, acoustically dominant (i.e., louder) ignored talkers were tracked neurally by late involvement of fronto-parietal regions, which contributed to enhanced neural selectivity. This neural selectivity, by way of representing the ignored talker, poses a mechanistic neural account of attention under real-life acoustic conditions.
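The post does not specify the model. A common way to quantify neural tracking of a talker is a forward temporal response function (TRF): a linear filter, estimated with ridge regression, that maps the speech envelope at a range of time lags onto the EEG, with tracking expressed as the correlation between predicted and recorded EEG. The pure-Python sketch below assumes that approach; the simulated envelope, the response kernel, the number of lags, and the ridge value are all hypothetical.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [[float(v) for v in a[i]] + [float(b[i])] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def lagged_design(stimulus, n_lags):
    """Row t holds stimulus[t], stimulus[t-1], ..., stimulus[t-n_lags+1] (zero-padded)."""
    return [[stimulus[t - k] if t - k >= 0 else 0.0 for k in range(n_lags)]
            for t in range(len(stimulus))]


def fit_trf(stimulus, response, n_lags, ridge=1.0):
    """Forward TRF weights w = (X^T X + ridge * I)^(-1) X^T y."""
    X = lagged_design(stimulus, n_lags)
    xtx = [[sum(row[i] * row[j] for row in X) + (ridge if i == j else 0.0)
            for j in range(n_lags)] for i in range(n_lags)]
    xty = [sum(row[i] * y for row, y in zip(X, response)) for i in range(n_lags)]
    return solve_linear_system(xtx, xty)


def predict(stimulus, weights):
    """Convolve the stimulus with the TRF weights (causal lags only)."""
    X = lagged_design(stimulus, len(weights))
    return [sum(w * v for w, v in zip(weights, row)) for row in X]


def pearson_r(a, b):
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


# Toy demo: simulated envelope and a simulated "EEG" channel with noise
rng = random.Random(1)
envelope = [rng.gauss(0.0, 1.0) for _ in range(500)]
true_trf = [0.0, 0.8, 0.4, -0.3]  # hypothetical response kernel
eeg = [p + rng.gauss(0.0, 0.5) for p in predict(envelope, true_trf)]
weights = fit_trf(envelope, eeg, n_lags=len(true_trf), ridge=1.0)
tracking = pearson_r(predict(envelope, weights), eeg)
```

In a real analysis, the ridge parameter would be chosen by cross-validation and tracking evaluated on held-out data; fitting separate models for the attended and the ignored talker is what allows their neural representations to be compared.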

The paper is available here.